Aria Gen 2 is a major step forward for wearable research tools, combining advanced sensor systems, on-device machine perception, and improved comfort. Developed as part of Meta’s Project Aria, Aria Gen 2 helps researchers worldwide push the boundaries of machine perception, contextual AI, and robotics through real-time data collection and high-fidelity capture of the physical world.

The device is not a consumer product but a platform for academic and industrial research, designed as a launchpad for the next generation of context-aware computing.

Table of contents

- Enhanced Wearability and Ergonomic Design

- Breakthrough Computer Vision Camera System

- New Integrated Sensors for Comprehensive Environmental Awareness

- Precision Time Synchronization

- On-Device Real-Time Machine Perception

- Real-Time Data Pipeline for Multimodal Research

- A Platform for the Next Generation of Contextual AI

- Where to See Aria Gen 2 in Action

- Conclusion

Enhanced Wearability and Ergonomic Design

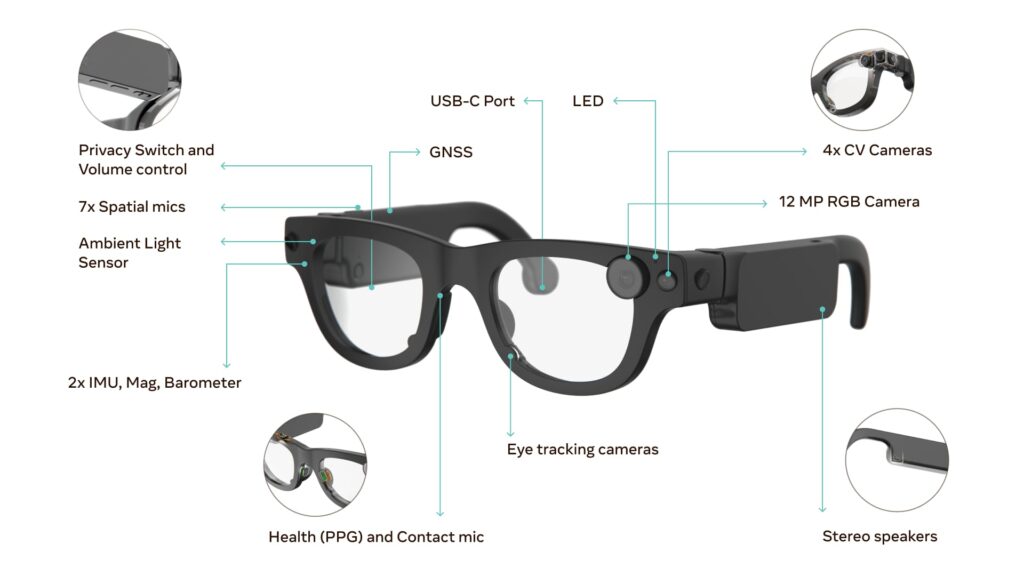

Aria Gen 2 improves on the original Aria glasses with a more adaptable, user-friendly form factor. At 74 to 76 grams depending on size, it is lightweight, and it now has foldable arms for easier portability. It comes in eight size variants to fit diverse facial structures, making extended wear more comfortable for a wide range of users.

Each size variant accounts for critical human factors such as head width and nose bridge depth, supporting both precise sensor alignment and sustained comfort.


Breakthrough Computer Vision Camera System

Aria Gen 2 is equipped with four computer vision (CV) cameras, double the number on Gen 1. These cameras offer 120 dB high dynamic range (HDR), up from 70 dB on Gen 1. The wider dynamic range helps the cameras retain detail in both bright daylight and dim indoor conditions.
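To put the 120 dB and 70 dB figures in perspective, the snippet below converts decibels into a linear ratio between the brightest and darkest signal levels a sensor can record. It assumes the 20·log₁₀ convention commonly used for image-sensor dynamic range; the source does not say which convention Meta uses. Under that assumption, 120 dB corresponds to 1,000,000:1 and 70 dB to roughly 3,162:1, a difference of about 316×.

```python
def db_to_ratio(db: float) -> float:
    """Convert a dynamic range in dB to a linear max/min signal ratio.

    Assumes the 20*log10 convention typically used for image sensors.
    """
    return 10 ** (db / 20)

gen2 = db_to_ratio(120)  # Aria Gen 2 CV cameras (figure from the text)
gen1 = db_to_ratio(70)   # Aria Gen 1 (figure from the text)
print(f"Gen 2: {gen2:,.0f}:1, Gen 1: {gen1:,.0f}:1, gain: {gen2 / gen1:.0f}x")
```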

The glasses also feature:

- Wider Field of View (FOV): Essential for broader scene understanding and advanced gesture tracking.

- 80° Stereo Overlap: Up from 35° on Gen 1, this enables higher-quality depth perception and 3D reconstruction using stereo foundation models.
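Stereo overlap matters because depth can only be triangulated for points that both cameras see. For a rectified stereo pair, the textbook pinhole relation is Z = f·B/d: Z is depth, f is focal length in pixels, B is the baseline between camera centers, and d is the pixel disparity of the same point across the two images. The sketch below is this classical formula only, not Meta's stereo foundation-model pipeline. The focal length and baseline are hypothetical values, not Aria Gen 2 calibration data.

```python
def depth_from_disparity(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth in meters of a point seen by a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        # Zero or negative disparity means no valid triangulation (point at infinity or a bad match).
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px

# Hypothetical camera parameters, for illustration only.
FOCAL_PX = 300.0
BASELINE_M = 0.12
for d in (60.0, 18.0, 6.0):
    z = depth_from_disparity(FOCAL_PX, BASELINE_M, d)
    print(f"disparity {d:5.1f} px -> depth {z:.2f} m")
```

Depth is inversely proportional to disparity, so a one-pixel matching error costs far more accuracy on distant points than on nearby ones.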

These improvements open up applications in environmental mapping, object localization, and robotic vision where precision and consistency are critical.

New Integrated Sensors for Comprehensive Environmental Awareness

Aria Gen 2 introduces several new sensors that expand the glasses’ capability to interpret and interact with the world around them.

Ambient Light Sensor (ALS)

A calibrated ALS supports finer exposure control and basic scene recognition by distinguishing indoor from outdoor lighting. Its ultraviolet mode adds a further cue for environmental classification, supporting adaptive vision algorithms.
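As a rough illustration of how visible-light and UV readings could feed an indoor/outdoor decision, here is a minimal rule-based sketch. The `AlsReading` type, its fields, and both thresholds are hypothetical and are not Aria's API or calibrated values. The source does not describe Meta's classification method.

```python
from dataclasses import dataclass

@dataclass
class AlsReading:
    lux: float       # visible illuminance (hypothetical field)
    uv_index: float  # reading from the sensor's UV mode (hypothetical field)

# Hypothetical thresholds chosen for illustration, not calibrated Aria values.
OUTDOOR_LUX = 2000.0
OUTDOOR_UV = 0.5

def classify_lighting(r: AlsReading) -> str:
    # Daylight carries a UV component that most indoor lighting lacks,
    # so a UV reading is treated as a strong outdoor cue on its own.
    if r.uv_index >= OUTDOOR_UV:
        return "outdoor"
    # Otherwise fall back to visible brightness alone.
    if r.lux >= OUTDOOR_LUX:
        return "outdoor"
    return "indoor"

print(classify_lighting(AlsReading(lux=300.0, uv_index=0.0)))
print(classify_lighting(AlsReading(lux=800.0, uv_index=2.0)))
```

The UV check comes first so that an overcast outdoor scene, which can be dimmer than a brightly lit room, is still labeled outdoor.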

Contact Microphone

A contact microphone embedded in the nosepad captures user speech even in noisy or windy environments, a scenario where traditional microphones often fail. This enables robust voice-based data annotation and interaction in challenging research conditions.

Heart Rate Monitoring

A photoplethysmography (PPG) sensor, also housed in the nosepad, estimates the wearer’s heart rate. This supports studies that combine physiological response tracking with environmental and behavioral data, which is key for research on stress, emotion detection, and human factors.
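The source does not say how Aria Gen 2 turns the PPG signal into a heart-rate estimate. A common textbook approach detects pulse peaks in the waveform and converts the typical interval between them into beats per minute. The sketch below applies that approach to a synthetic 72 bpm signal. The function name and the peak rule are assumptions for illustration.

```python
import math
from statistics import median

def estimate_bpm(signal: list[float], fs_hz: float) -> float:
    """Estimate heart rate from a PPG waveform via peak-to-peak intervals.

    A peak is a sample above the signal mean that exceeds its left neighbor
    and is at least as large as its right neighbor.
    """
    mean = sum(signal) / len(signal)
    peaks = [
        i for i in range(1, len(signal) - 1)
        if signal[i] > mean and signal[i - 1] < signal[i] >= signal[i + 1]
    ]
    if len(peaks) < 2:
        raise ValueError("need at least two pulse peaks")
    intervals_s = [(b - a) / fs_hz for a, b in zip(peaks, peaks[1:])]
    # The median interval is robust to an occasional missed or spurious peak.
    return 60.0 / median(intervals_s)

# Synthetic 72 bpm (1.2 Hz) pulse sampled at 50 Hz for 10 seconds.
fs = 50.0
sig = [math.sin(2 * math.pi * 1.2 * n / fs) for n in range(int(10 * fs))]
print(f"{estimate_bpm(sig, fs):.1f} bpm")
```

Real PPG data needs band-pass filtering and motion-artifact rejection before peak detection; the clean sine wave here skips both. Integer sample spacing also limits the resolution, so the estimate lands near 72 bpm rather than exactly on it.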

Precision Time Synchronization

One of the major technological milestones in Aria Gen 2 is support for hardware-based time alignment between devices. Using Sub-GHz radio, a device can synchronize with other Aria Gen 2 glasses or compatible devices with sub-millisecond accuracy.

This advancement allows researchers to perform multi-device scene captures with tight temporal alignment—essential for developing multi-view machine perception datasets, crowd-sourced environmental analysis, and collaborative robotic systems.
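The source states that alignment is hardware-based over Sub-GHz radio but does not publish the protocol. As a generic illustration of how two device clocks can be related, the sketch uses the classic two-way timestamp exchange, the same offset and delay arithmetic NTP uses. It assumes the radio path delay is symmetric. This is not Aria's actual protocol, and the `Exchange` type and function names are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class Exchange:
    t1: float  # request sent, leader clock (s)
    t2: float  # request received, follower clock (s)
    t3: float  # reply sent, follower clock (s)
    t4: float  # reply received, leader clock (s)

def estimate_offset(ex: Exchange) -> tuple[float, float]:
    """Return (offset, round_trip_delay), where offset = follower clock - leader clock.

    Assumes the outbound and return paths take equal time.
    """
    offset = ((ex.t2 - ex.t1) + (ex.t3 - ex.t4)) / 2
    delay = (ex.t4 - ex.t1) - (ex.t3 - ex.t2)
    return offset, delay

def to_leader_time(follower_ts: float, offset: float) -> float:
    """Map a follower timestamp into the leader's time domain."""
    return follower_ts - offset

# Follower clock runs 250 ms ahead; 0.4 ms one-way delay; 0.6 ms turnaround.
ex = Exchange(t1=10.0, t2=10.2504, t3=10.2510, t4=10.0014)
offset, delay = estimate_offset(ex)
print(f"offset {offset * 1e3:.3f} ms, round trip {delay * 1e3:.3f} ms")
print(to_leader_time(12.75, offset))
```

Practical schemes repeat the exchange and favor samples with the smallest round-trip delay, since those leave the least room for path asymmetry.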

On-Device Real-Time Machine Perception

Aria Gen 2 includes a custom-built coprocessor developed by Meta, optimized for energy-efficient real-time machine perception. This processor enables several sophisticated capabilities, all processed directly on the device:

Visual Inertial Odometry (VIO)

By fusing data from visual and inertial sensors, the glasses track their own position and orientation in six degrees of freedom (6DOF). VIO lets Aria Gen 2 localize itself as it moves through complex environments, which is critical for autonomous navigation, spatial computing, and augmented reality development.
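VIO produces a 6DOF pose for each timestamp: three rotational and three translational degrees of freedom. The sketch below shows one common representation, a unit quaternion plus a translation, and uses it to map a point from the device frame into the world frame. The class name, the world-from-device convention, and the (w, x, y, z) quaternion order are assumptions for illustration, not Meta's data format.

```python
import math
from dataclasses import dataclass

@dataclass
class Pose6DoF:
    """Hypothetical world-from-device pose: unit quaternion (rotation) + translation in meters."""
    qw: float
    qx: float
    qy: float
    qz: float
    tx: float
    ty: float
    tz: float

    def rotation_matrix(self) -> list[list[float]]:
        # Standard conversion of a unit quaternion (w, x, y, z) to a 3x3 rotation matrix.
        w, x, y, z = self.qw, self.qx, self.qy, self.qz
        return [
            [1 - 2 * (y * y + z * z), 2 * (x * y - w * z), 2 * (x * z + w * y)],
            [2 * (x * y + w * z), 1 - 2 * (x * x + z * z), 2 * (y * z - w * x)],
            [2 * (x * z - w * y), 2 * (y * z + w * x), 1 - 2 * (x * x + y * y)],
        ]

    def transform(self, p: tuple[float, float, float]) -> tuple[float, ...]:
        """Map a device-frame point to the world frame: p_world = R @ p_device + t."""
        R = self.rotation_matrix()
        t = (self.tx, self.ty, self.tz)
        return tuple(sum(R[i][j] * p[j] for j in range(3)) + t[i] for i in range(3))

# Device yawed 90° about z and positioned at (1, 2, 0) in the world.
s = math.sqrt(0.5)
pose = Pose6DoF(qw=s, qx=0.0, qy=0.0, qz=s, tx=1.0, ty=2.0, tz=0.0)
print(pose.transform((1.0, 0.0, 0.0)))  # approximately (1, 3, 0)
```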

Eye Tracking

A camera-based eye tracking system provides:

- Per-eye gaze vectors

- Vergence point estimation

- Blink detection

- Pupil diameter and center

- 3D corneal center

These outputs are vital for attention modeling, intent prediction, and natural human-computer interaction, paving the way for applications in AR interfaces, neurocognitive research, and adaptive learning systems.
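The source does not describe how the vergence point is computed. One standard geometric approach treats each eye's gaze as a 3D line and takes the midpoint of the shortest segment between the two lines. The function names, eye positions, and coordinate frame below are hypothetical.

```python
from typing import Optional

Vec3 = tuple[float, float, float]

def _dot(u: Vec3, v: Vec3) -> float:
    return sum(a * b for a, b in zip(u, v))

def _sub(u: Vec3, v: Vec3) -> Vec3:
    return (u[0] - v[0], u[1] - v[1], u[2] - v[2])

def _along(o: Vec3, d: Vec3, s: float) -> Vec3:
    return (o[0] + s * d[0], o[1] + s * d[1], o[2] + s * d[2])

def vergence_point(left_origin: Vec3, left_dir: Vec3,
                   right_origin: Vec3, right_dir: Vec3) -> Optional[Vec3]:
    """Midpoint of the closest approach between two gaze lines, or None if (nearly) parallel.

    Treats each gaze as an infinite line; a fuller version would reject
    negative parameters (points behind the eyes).
    """
    w0 = _sub(left_origin, right_origin)
    a, b, c = _dot(left_dir, left_dir), _dot(left_dir, right_dir), _dot(right_dir, right_dir)
    d, e = _dot(left_dir, w0), _dot(right_dir, w0)
    denom = a * c - b * b
    if denom <= 1e-12 * a * c:
        return None  # parallel gaze: the eyes converge at (effectively) infinity
    s = (b * e - c * d) / denom  # parameter along the left gaze line
    t = (a * e - b * d) / denom  # parameter along the right gaze line
    p_left = _along(left_origin, left_dir, s)
    p_right = _along(right_origin, right_dir, t)
    return tuple((p + q) / 2 for p, q in zip(p_left, p_right))

# Eyes 64 mm apart, both fixating a target 0.5 m straight ahead.
print(vergence_point((-0.032, 0.0, 0.0), (0.032, 0.0, 0.5),
                     (0.032, 0.0, 0.0), (-0.032, 0.0, 0.5)))
```

Using the midpoint is a deliberate choice: measured gaze rays almost never intersect exactly, so the closest-approach midpoint gives a stable estimate. The segment's length also offers a rough measure of how consistent the two gaze estimates are.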

3D Hand Tracking

Aria Gen 2 includes full 3D hand tracking, capturing articulated joint poses within the device’s frame of reference. This enables high-fidelity hand annotations and supports development in dexterous robotic manipulation, gesture-based control systems, and immersive interaction prototypes.
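As an illustration of how articulated joint positions can drive gesture-based control, the sketch below detects a pinch from the distance between thumb tip and index fingertip. The joint names, the dictionary layout, and the 2 cm threshold are hypothetical and do not reflect Aria's actual hand-tracking output format.

```python
import math

Point3 = tuple[float, float, float]

# Hypothetical threshold: fingertips closer than 2 cm count as a pinch.
PINCH_THRESHOLD_M = 0.02

def is_pinching(joints: dict[str, Point3], threshold_m: float = PINCH_THRESHOLD_M) -> bool:
    """Return True if the thumb tip and index fingertip are within threshold_m meters.

    `joints` maps hypothetical joint names to 3D positions in the device frame.
    """
    return math.dist(joints["thumb_tip"], joints["index_tip"]) < threshold_m

hand = {"thumb_tip": (0.10, -0.05, 0.30), "index_tip": (0.11, -0.045, 0.305)}
print(is_pinching(hand))
```

Because the joints are expressed in the device frame, the distance check does not depend on where the wearer is standing. Placing the hand in the world would additionally require the device's pose.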

Real-Time Data Pipeline for Multimodal Research

Aria Gen 2 is more than a sensor hub: it is an integrated data pipeline that streams multimodal data in real time. Whether used to train foundation models, generate annotated visual datasets, or prototype AI agents, the glasses offer a scalable solution for researchers working on embodied intelligence.

This capability, combined with Aria Gen 2’s open research infrastructure and Meta’s toolchain, makes it easier to develop and test context-aware machine learning models.
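A core step in any multimodal pipeline is interleaving per-sensor streams, which arrive at different rates, into a single time-ordered stream. The sketch below does this with Python's `heapq.merge`, which lazily merges inputs that are each already sorted. The `Sample` type, stream names, and rates are hypothetical and do not reflect Meta's client SDK or data formats.

```python
import heapq
from typing import Iterable, Iterator, NamedTuple

class Sample(NamedTuple):
    timestamp_ns: int
    stream: str
    payload: object

def merge_streams(*streams: Iterable[Sample]) -> Iterator[Sample]:
    """Lazily interleave per-sensor streams (each already time-ordered) by timestamp.

    On equal timestamps, samples come out in the order the streams were passed.
    """
    return heapq.merge(*streams, key=lambda s: s.timestamp_ns)

# Hypothetical streams: ~30 Hz camera, 100 Hz IMU, one PPG sample.
rgb = [Sample(0, "rgb", "frame0"), Sample(33_333_333, "rgb", "frame1")]
imu = [Sample(t, "imu", None) for t in range(0, 40_000_001, 10_000_000)]
ppg = [Sample(20_000_000, "ppg", None)]

merged = list(merge_streams(iter(rgb), iter(imu), iter(ppg)))
for s in merged:
    print(s.timestamp_ns, s.stream)
```

Because `heapq.merge` holds only one pending item per input, memory use stays constant no matter how long the streams run, which suits live streaming.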

A Platform for the Next Generation of Contextual AI

Researchers using Aria Gen 2 gain access to a rapidly expanding ecosystem of tools, datasets, and developer support. The glasses serve as a reference platform for Meta’s long-term vision of ambient, embodied AI—AI systems that understand not just what we say, but where we are, what we see, and how we feel.

By offering highly accurate multimodal inputs in real-world environments, Aria Gen 2 enables rapid experimentation in domains such as:

- Human-robot interaction

- Wearable cognitive augmentation

- Real-time spatial computing

- Personalized health monitoring

- Distributed environmental sensing

Where to See Aria Gen 2 in Action

The Aria Gen 2 system will be showcased at CVPR 2025 in Nashville, Tennessee, with live demonstrations at Meta’s booth. Researchers can experience the glasses’ capabilities firsthand and learn more about ongoing collaborations and upcoming application opportunities.

Interested researchers can join the Aria Gen 2 interest list, with broader application access expected later this year. Meanwhile, the Aria Research Kit with Gen 1 is still available for those looking to begin development immediately.

Conclusion

Aria Gen 2 represents the forefront of wearable AI research platforms. By integrating next-gen sensor hardware, real-time machine perception, and ergonomic design into a single device, it unlocks a new realm of possibilities for academic and industrial researchers alike. It is not just a wearable—it is an AI-powered research lab on your face, ready to change the way we perceive and interact with the world.

Source: https://ai.meta.com/blog/aria-gen-2-research-glasses-under-the-hood-reality-labs/